TKGs cluster based on a custom ClusterClass

There are a lot of configuration changes between the Cluster v1beta1 API and earlier APIs. One of them is a new resource introduced in TKG 2.0, called ClusterClass.


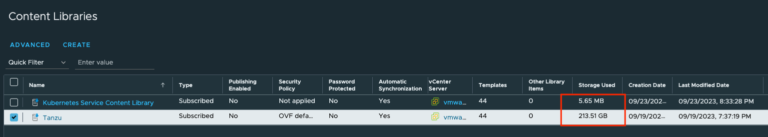

The challenge is simple: the vSAN datastore is running out of space and needs to be expanded.

The whole process is quite straightforward. If there are free disk slots in the ESXi hosts, get new drives, install them in the servers, and reconfigure the vSAN capacity pool from vCenter.

Some time ago, I received a message from users saying they couldn't create new services on TKG clusters. The new services were stuck in an unusual "pending" status. We hadn't made any major changes to the infrastructure (no upgrades, etc.). It was a classic situation: it worked before, and now it doesn't.

Recently, I deployed a new cluster using Tanzu Mission Control. Everything went well, and the machines were created. Then I connected to the dedicated vSphere namespace and tried to list the TKC clusters, but the new cluster was not visible. Why?

If you're using vSphere with Tanzu on vSphere 7 and vSphere 8, you've probably noticed a lot of differences, from the Enable Workload Management wizard to a separate section for editing the Supervisor cluster.

vSAN OSA (Original Storage Architecture) is one of two ways to implement a virtual storage solution. The other is vSAN ESA (Express Storage Architecture). Today I want to focus on the first one. In short, vSAN lets you configure virtual storage across VMware ESXi hosts using their local drives.

vSphere Lifecycle Manager (vLCM) was introduced in vSphere 7. It is the next-generation management tool for updating the ESXi fleet and keeping it in sync. The main goal of vLCM is to maintain configuration consistency across ESXi hosts in the infrastructure.

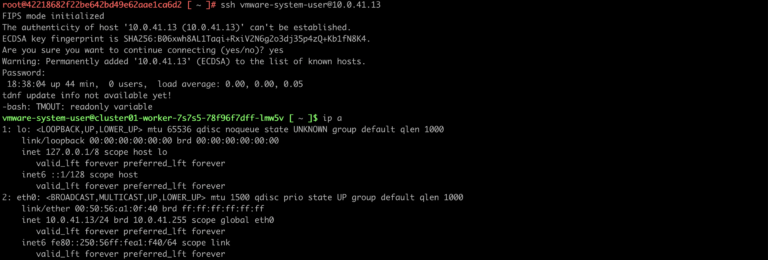

In some situations, we need to check network connections or node configuration, debug failures and other problems, or inspect something directly on a TKGs control plane or worker node.

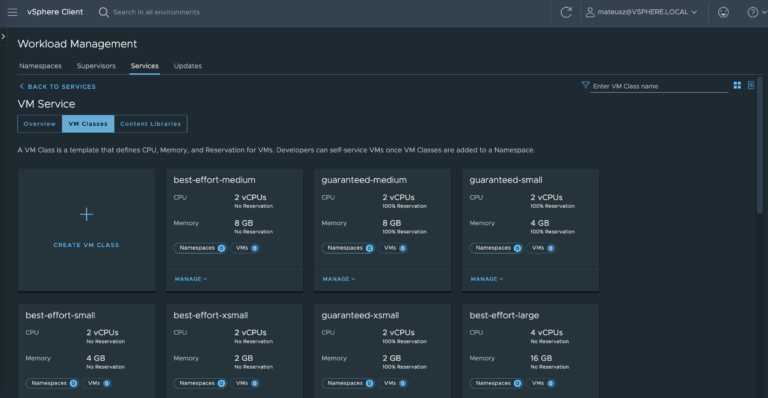

Depending on the project requirements, clients or developers may need large Kubernetes nodes, such as 32 vCPU and 128 GB of RAM, or smaller ones with only 2 vCPU and 8 GB of RAM. But what happens if the specific node size you need is not among the predefined ones?

Patching infrastructure is a typical administrative task. To keep the infrastructure secure and up to date, it's important to install updates, especially the critical ones.

Copyright vmattroman.com © 2026